Can Rust speed up your Azure Functions?

Using Rust on Microsoft Azure Functions with WebAssembly

What do you think about serverless? Is it the best thing ever - or just another terrible idea? Regardless of whether you love or hate the term or the technology, I think universal access to it is critical. And since Rust is fast, safe, and simply awesome, I’ll give that a try!

Node, wasm & Rust

As a high-level recap: Node.js is a runtime for JavaScript applications based on Google’s V8 engine. It’s highly optimized and provides a way to create server applications in JavaScript (including derivatives like TypeScript or CoffeeScript). Just like in the browser, the engine takes source text, parses it, and compiles it at runtime (JIT).

A major drawback of this approach has always been the need to parse and compile the source code before it can run. As a consequence, running a JavaScript application (in the browser or on the server) is comparatively slow and large - something that could easily be solved by shipping binary executables instead. WebAssembly, which grew out of asm.js, is a refined take on that idea.

Rust, on the other hand, is a completely different beast. It compiles to native code using the popular LLVM toolchain, which supports flexible compilation targets (e.g. CPU architectures) by first producing an intermediate representation (LLVM IR) that is then turned into native code for the desired architecture. I think this is a really neat idea and a great tool for building new languages. Besides Rust, a few other languages (like Swift) use this approach as well!

One such target is - of course - WebAssembly. A JavaScript application can then go ahead and load the binary and call its exported functions:

    let imports = {};
    const bytes = require('fs').readFileSync('wasm_pibench_bg.wasm');
    const wasmModule = new WebAssembly.Module(bytes);
    const wasmInstance = new WebAssembly.Instance(wasmModule, imports);
    module.exports = wasmInstance.exports;

The Rust team and community have been working hard to improve the tooling around WebAssembly. Projects like wasm-bindgen and wasm-pack make it dead simple to create, compile, and publish (to npm!) safe and fast Rust code. This makes it possible to run a search engine in WebAssembly, as well as games, or - hopefully - just about any Rust project. I hope to see more cool things coming out of that effort in the future!

Be Fearless with Serverless

Fearless concurrency has been a motto of the Rust community for a while now. Maybe we can expand that to going serverless? To recap: serverless is just a name for a platform that only runs a function (instead of entire applications like a regular PaaS offering), which is a nice way to save on server/operating/framework/etc. maintenance and scaling! There are still servers, but someone else maintains them for you, and for simple use cases this is a great way of getting up and running quickly.

Use cases include:

- Data transformation (e.g. wrapping a JSON webservice to get CSV)

- File operations on blob storage (like creating thumbnails from every uploaded image)

- Tasks in machine learning

- Alerts in IoT applications

- Anything short running (< 5 minutes on Azure Functions)

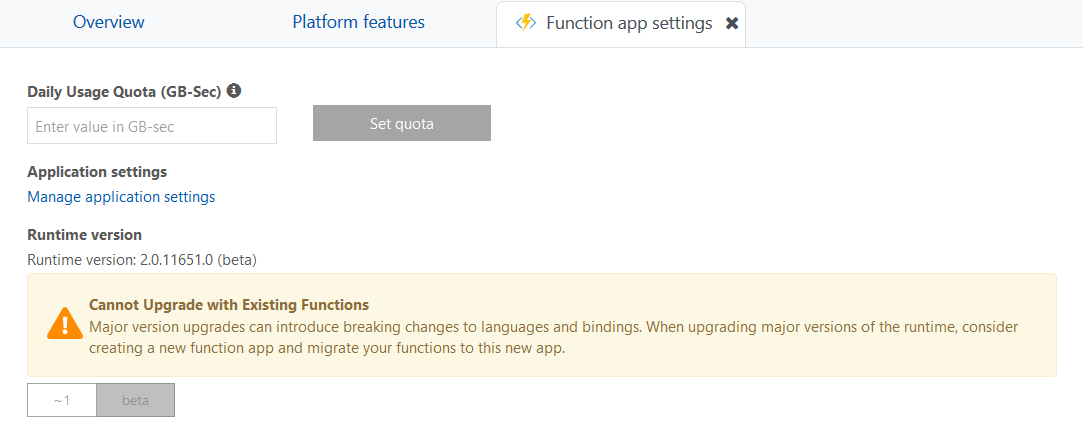

For this blog post I am going to use Azure Functions (part of Microsoft’s cloud offering), which will be based on the Azure WebJobs SDK once its runtime 2.0 is out of preview. The current version unfortunately is not open source and only runs Node.js version 5.x, which does not support WebAssembly.

Let’s look into how well that works then! 😁

Sharing a Pi(e)

As a first step we need a “hard” problem, something that takes a while to compute. For this, we are going to approximate Pi using the Monte Carlo method! It “computes” Pi like this:

(Source: Wikipedia)
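
In words, and as a short sketch of the underlying reasoning: assuming the points (x, y) are drawn uniformly from the unit square, the share of points that land inside the quarter circle of radius 1 approaches the ratio of the two areas.

```latex
\[
P\bigl(x^2 + y^2 < 1\bigr)
  = \frac{\text{area of quarter circle}}{\text{area of unit square}}
  = \frac{\pi / 4}{1}
  = \frac{\pi}{4}
\qquad\Longrightarrow\qquad
\pi \approx 4 \cdot \frac{\text{hits}}{n}
\]
```

Here n is the number of sampled points and hits is how many of them satisfy the inequality. The statistical error of the estimate shrinks roughly with 1/sqrt(n), which is why a large n is needed for even a few correct digits.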

Thus, the code required to achieve this is really simple and compact:

    function monte_carlo_pi(reps) {
      let count = 0;
      for (let i = 0; i < reps; i++) {
        const x = Math.random();
        const y = Math.random();
        if (in_unit_circle(x, y)) {
          count += 1;
        }
      }
      return count;
    }

    function in_unit_circle(x, y) {
      return (x * x + y * y) < 1.0;
    }

    function approximate_pi(n) {
      let hits = monte_carlo_pi(n);
      return hits / n * 4.0;
    }

The same thing in Rust (note the #[wasm_bindgen] annotations, which do the actual magic):

    #![feature(proc_macro, wasm_custom_section, wasm_import_module)]

    extern crate wasm_bindgen;

    use wasm_bindgen::prelude::*;

    // Provided by the JavaScript side at load time
    #[wasm_bindgen]
    extern {
        fn random() -> f64;
    }

    // Counts how many of `reps` random points fall inside the unit circle
    fn monte_carlo_pi(reps: u32) -> u64 {
        let mut count = 0;
        for _ in 0..reps {
            let x = random();
            let y = random();
            if in_unit_circle(x, y) {
                count += 1;
            }
        }
        count
    }

    fn in_unit_circle(x: f64, y: f64) -> bool {
        x * x + y * y < 1.0
    }

    // Exported to JavaScript via wasm-bindgen
    #[wasm_bindgen]
    pub fn approximate_pi(n: u32) -> f64 {
        let hits = monte_carlo_pi(n);
        hits as f64 / n as f64 * 4.0
    }

Generally, I would say these two are very much the same. The code extern { fn random() -> f64 } declares a function random() that is passed in from somewhere else - i.e. the Node.js runtime. We can bind any function we want to that name, and in this case it’s Math.random().
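
To make the import mechanism a bit more concrete, here is a minimal sketch of how a host function reaches a wasm module through the imports object. It does not use the project's binary or the glue code wasm-bindgen generates. Instead it uses a tiny hand-assembled module whose names (`env`, `random`, `draw`) and the helper `instantiateWithRandom` are made up for this illustration.

```javascript
// Hand-assembled WebAssembly module (illustrative, NOT wasm-bindgen output).
// It imports `env.random: () -> f64` and exports `draw`, which just calls it.
const bytes = new Uint8Array([
  0x00, 0x61, 0x73, 0x6d, 0x01, 0x00, 0x00, 0x00, // magic + version
  0x01, 0x05, 0x01, 0x60, 0x00, 0x01, 0x7c,       // type 0: () -> f64
  0x02, 0x0e, 0x01,                               // import section, 1 entry
  0x03, 0x65, 0x6e, 0x76,                         //   module name "env"
  0x06, 0x72, 0x61, 0x6e, 0x64, 0x6f, 0x6d,       //   field name "random"
  0x00, 0x00,                                     //   a function of type 0
  0x03, 0x02, 0x01, 0x00,                         // one local function, type 0
  0x07, 0x08, 0x01, 0x04, 0x64, 0x72, 0x61, 0x77, // export named "draw" ...
  0x00, 0x01,                                     //   ... is function index 1
  0x0a, 0x06, 0x01, 0x04, 0x00, 0x10, 0x00, 0x0b, // body: call 0; end
]);

// Hypothetical helper: wires any JavaScript function to the wasm import.
function instantiateWithRandom(randomFn) {
  const imports = { env: { random: randomFn } };
  const wasmModule = new WebAssembly.Module(bytes);
  return new WebAssembly.Instance(wasmModule, imports).exports;
}

const wasm = instantiateWithRandom(Math.random);
console.log(wasm.draw()); // a number in [0, 1), produced by Math.random
```

The point of the sketch is only that the wasm side never knows where random() comes from: whatever function the host places in the imports object is the one that gets called.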

Function setup

Azure Functions can be deployed in several ways, but when additional files (like a wasm binary) are needed, GitHub is quite convenient: with webhook-triggered redeployment it acts as a tiny CD system. For the setup to work, each repository needs a defined structure, which you can find in the docs.

Check out the GitHub repository for the project as another example. For this experiment, I am going to use an HTTP-triggered function!
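
As a rough sketch of what such an HTTP-triggered function might look like, here is a handler written against the callback style of the Azure Functions JavaScript model (`context.res`, `context.done()`). The helper `makeHandler`, the query parameter `n`, and its default value are assumptions made for this example and are not taken from the repository.

```javascript
// Wraps any approximate_pi(n) implementation in an HTTP handler, so the same
// wrapper can serve the wasm and the plain JavaScript variant.
// `makeHandler` and the `n` query parameter are hypothetical.
function makeHandler(approximatePi) {
  return function (context, req) {
    const n = parseInt((req.query && req.query.n) || '10000000', 10);
    if (!Number.isFinite(n) || n <= 0) {
      context.res = { status: 400, body: 'n must be a positive integer' };
    } else {
      context.res = { status: 200, body: { n: n, pi: approximatePi(n) } };
    }
    context.done(); // signals the runtime that the response is ready
  };
}

// In a function folder this could be wired up like so (assumption):
// module.exports = makeHandler(require('./wasm_pibench.js').approximate_pi);

// Local smoke test with a fake context object, no Azure runtime needed.
const fakeContext = { done: () => console.log(JSON.stringify(fakeContext.res)) };
makeHandler(() => 3.14)(fakeContext, { query: { n: '1000' } });
```

Injecting the implementation keeps the handler identical for both variants, so any difference in response time comes from the computation rather than from the wrapper.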

Getting the WASM

As explained in this post, the first thing to do is to install the wasm32 toolchain using rustup. With that in place, a single command will build everything:

cargo +nightly build --target wasm32-unknown-unknown --release

This produces some binary output in the target/wasm32-unknown-unknown directory that can then be processed further using wasm-bindgen:

wasm-bindgen target/wasm32-unknown-unknown/release/wasm_pibench.wasm --nodejs

Running this command generates two additional files that take care of loading the module and of exporting/importing all declared functions in JavaScript. Since improving this process is an ongoing effort, look at the wasm-bindgen repository for more information!

Unfortunately, I could not use the rand crate at this time: the 0.5 preview version wouldn’t build on my machine, and the 0.4 version built but did not work with wasm.

Local testing

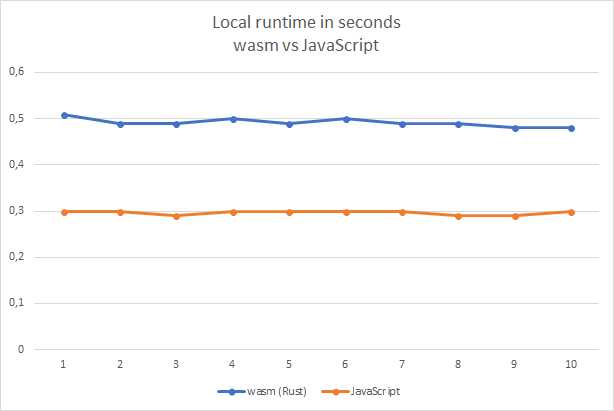

Having a .wasm file ready and the includes set up in the .js files, we can now move on to testing. The test code is simply running 10 million iterations (a random choice really) of the simulation to approximate Pi:

    const rust = require("./wasm_pibench.js");
    const almost_pi = rust.approximate_pi(10000000);
    console.log(almost_pi);

Using time to track the elapsed time, we can roughly estimate the speed of each implementation. Let’s see …

… no. Contrary to my expectations, the local tests showed that the wasm implementation is considerably slower than its JavaScript relative (0.51 vs. 0.30 seconds, roughly 70% more time). This definitely needs more investigation! (The Node version was 9.4.0.)
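
One thing worth keeping in mind is that time measures the whole process, including Node startup and loading the module. The following sketch shows one possible way to time only the computation from inside the process, using process.hrtime(). The helper `timeIt` is hypothetical, and this is not how the numbers above were obtained.

```javascript
// Runs fn() once; returns its result plus the elapsed wall-clock time in ms.
// `timeIt` is a hypothetical helper, not part of the original project.
function timeIt(fn) {
  const start = process.hrtime();     // [seconds, nanoseconds]
  const result = fn();
  const diff = process.hrtime(start); // time elapsed since `start`
  return { result: result, ms: diff[0] * 1e3 + diff[1] / 1e6 };
}

// Plain JavaScript baseline, same algorithm as in the post.
function approximatePi(n) {
  let hits = 0;
  for (let i = 0; i < n; i++) {
    const x = Math.random();
    const y = Math.random();
    if (x * x + y * y < 1.0) hits += 1;
  }
  return hits / n * 4.0;
}

const run = timeIt(() => approximatePi(1000000));
console.log(run.result.toFixed(3), run.ms.toFixed(1) + ' ms');
```

To time the wasm variant the same way, the callback could call the approximate_pi export of the generated glue file instead, assuming it has been built as described above.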

To reproduce this experiment, you can use the local.js files in each function’s folder.

Into the clouds

First, a version of Node that supports WebAssembly is required. Getting one starts with enabling preview/beta features in the application settings.

Then an environment variable needs to be set to the desired version of Node. After some trial and error, this was the highest version I could get:

    {
      http_parser: '2.8.0',
      node: '8.11.1',
      v8: '6.2.414.50',
      uv: '1.19.1',
      zlib: '1.2.11',
      ares: '1.10.1-DEV',
      modules: '57',
      nghttp2: '1.25.0',
      openssl: '1.0.2o',
      icu: '60.1',
      unicode: '10.0',
      cldr: '32.0',
      tz: '2017c'
    }

This should do the trick! Let’s deploy and see how well it does compared to the local counterparts.
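
Before deploying, a quick sanity check can confirm that the selected runtime actually exposes the WebAssembly API. The sketch below reads process.versions, which has the same shape as the listing above, and tests for the global WebAssembly object. The helper name `describeRuntime` is made up for this example.

```javascript
// Summarizes the runtime and reports whether the WebAssembly API is present.
// `describeRuntime` is a hypothetical helper for illustration.
function describeRuntime(versions, globalObject) {
  return {
    node: versions.node,
    v8: versions.v8,
    wasm: typeof globalObject.WebAssembly === 'object',
  };
}

console.log(describeRuntime(process.versions, global));
```

Logging this from within a function is a cheap way to see which Node version the platform really picked.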

Loading it up

To test these HTTP endpoints properly, I am going to use Visual Studio Team Services’ load testing tool. It uses Azure resources to issue the specified requests to a given endpoint and adds some nice stats and charts. This service is also free, and I posted about it a while back.

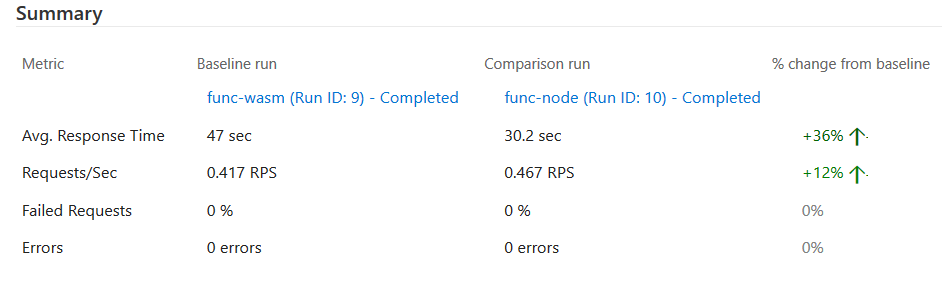

Let’s do two tests, one for each URL and check the response times. After a minute of constant load of 25 concurrent ‘users’, here’s what I got:

Same result 😢! On the bright side, Functions reproduces my local results, which means the setup works well in the cloud and that the issue could lie in the code itself or in the early stage of wasm support.

That’s all folks

While I am honestly quite shocked by the local performance of wasm with Node.js, I am even happier that it works well and that it makes running small Rust projects in the cloud easier. Hopefully the performance will improve in the future (I am not sure what’s missing at this point, let me know on Twitter if you know!), and I will continue to run tests and various experiments on Azure services to get Rust closer to the cloud 😁.

Do you want to try this at home? Check out the GitHub repository!

If you liked this kind of stuff, you can follow me on Twitter, or even better, add my RSS feed to your reader!